Welcome to the AES Blog

Greater than the sum of the parts: Evaluating the collective impact of complex programs

Advance Queensland (AQ) spans various types of programs, including grants, large-scale innovation events, mentoring programs, and the appointment of a Chief Entrepreneur for Queensland. The Queensland Department of Tourism, Innovation and Sport (DTIS) needed to understand how this multi-pronged investment changed the innovation ecosystem and knowledge economy in Queensland. The Department needed robust evidence to measure reach, progress and value for money, communicate success, identify challenges where further work is needed, and confidently direct future investment for the benefit of all Queenslanders.

Understanding system-level changes from complex programs requires measuring collective impact

Multi-faceted initiatives such as AQ aim to achieve collective impact – that is, individual programs are more successful because of simultaneous investment in multiple, sometimes seemingly unconnected, parts of the ecosystem, which in the process contributes to multiple strategic objectives. A collective impact evaluation approach, such as the one we used to assess the AQ portfolio of programs, can provide government with a more holistic picture of the impact of its investment at the system level than a typical program-level evaluation.

Informed by our work on AQ and other collective impact evaluations, we have distilled four success factors for effective evaluation of the impact of a portfolio of investment. We explore each one in detail below.

1. Create an explicit link between each program and the portfolio objectives

Gaining a clear picture of how each program aligns with the portfolio objectives is a critical first step in planning collective impact measurement.

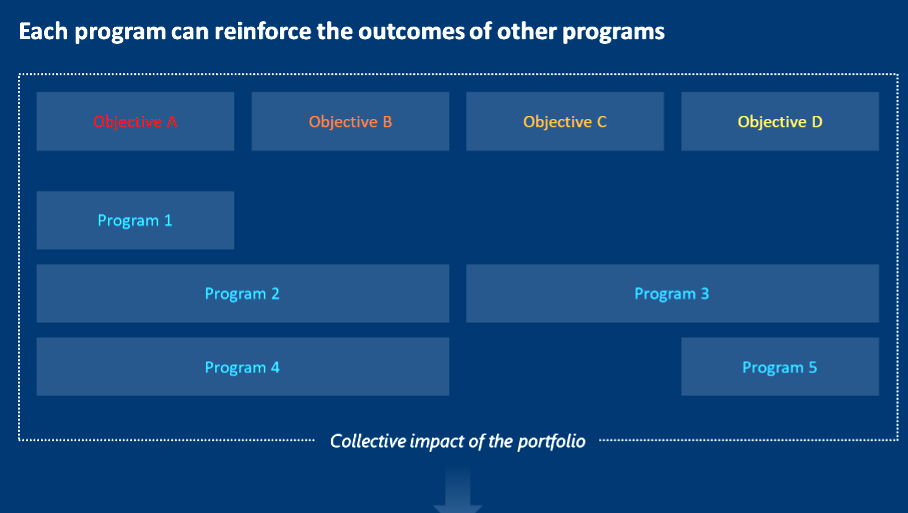

Starting with clear portfolio objectives and definitions of success makes it easier to articulate how each program is intended to contribute to those objectives. Laying this out visually highlights the intersections and interdependencies between programs, allowing stakeholders to see the part each program is expected to play and how it reinforces the outcomes of other programs.

This approach develops a common language to describe success and a common approach to collecting data across the programs (in contrast to a typical program evaluation approach, which will generally develop a theory of change and program logic for a singular program in isolation).

Ideally, this step of identifying portfolio objectives and program linkages is done in the policy planning and program design phase, when it can best contribute to program coherence. It provides an opportunity to identify the data required to measure success at the start of the program, increasing the likelihood that useful data will be collected throughout.

The Queensland Government did this well in setting up the Advance Queensland Evaluation Framework, which outlined the 10 objectives of AQ and aligned each program to these objectives. New programs that developed over time were added to the Framework. We then built on these objectives by specifying, and sometimes designing, quantifiable measures of success.

However, it is possible to retrofit this approach and apply a collective impact evaluation lens even if the policies and programs were not originally designed in that way.
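As a rough illustration of the program-to-objective mapping described in this section, the sketch below records which objectives each program is meant to serve and then surfaces the objectives that several programs share. The program names echo the program types mentioned earlier, but the objective labels and the function names are hypothetical placeholders, not the actual objectives in the Advance Queensland Evaluation Framework.

```python
from collections import defaultdict

# Hypothetical mapping of programs to portfolio objectives (illustrative only;
# these are NOT the real AQ objectives).
PROGRAM_OBJECTIVES = {
    "grants": {"grow knowledge jobs", "support start-ups"},
    "mentoring": {"support start-ups", "build skills"},
    "innovation events": {"build skills", "raise awareness"},
}


def programs_by_objective(mapping):
    """Invert a program -> objectives mapping into objective -> programs."""
    inverted = defaultdict(set)
    for program, objectives in mapping.items():
        for objective in objectives:
            inverted[objective].add(program)
    return dict(inverted)


def shared_objectives(mapping):
    """Return only the objectives that more than one program contributes to."""
    return {
        objective: programs
        for objective, programs in programs_by_objective(mapping).items()
        if len(programs) > 1
    }


shared = shared_objectives(PROGRAM_OBJECTIVES)
for objective in sorted(shared):
    print(objective, "<-", sorted(shared[objective]))
```

Objectives served by more than one program are the intersections where a common language and a common approach to data collection matter most.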

2. Plan evaluations and data collection at three levels – micro, meso and macro

Investment in evaluation should save taxpayer dollars in the medium term by reducing ineffective program spend. Nonetheless, evaluation can be expensive in the short term, not only in dollars, but in hours and resources from the commissioning agency and the program's stakeholders.

Evaluation spend should be commensurate with program risk and value. It should also be timed so outcomes can be seen and the results of the evaluation can feed into the policy cycle.

With a clear picture of the expected outcomes from the portfolio of investment, and of how each program will contribute to these outcomes, evaluation investment and stakeholder engagement can be planned to deliver useful insights to policymakers.

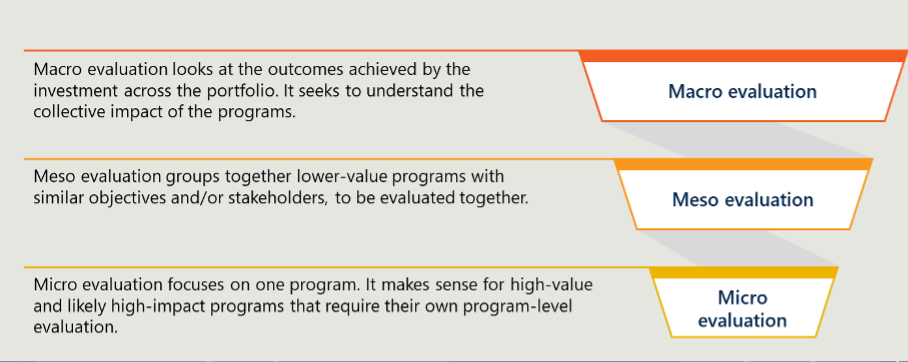

DTIS led the planning of the evaluation of the portfolio by identifying the need for three levels of evaluation: micro, meso and macro.

All three evaluation types are needed to produce the information policymakers require. Where the macro evaluation provides the big picture, micro and meso evaluations allow government to understand which programs contributed most, and in what ways, to the overall impact. When combined, this information provides invaluable evidence to decision-makers for future investment.

To support this, evaluation plans developed at the three levels should speak to each other. That is, use the same language to describe outcomes at all three levels, so that data can be aggregated up from micro to macro.

Having an evaluation partner work alongside the organisation to conduct the evaluations at all three levels enables greater consistency in approach and can therefore lead to more meaningful insights. It can also create efficiencies.

Nous did this with DTIS in the evaluation of AQ. We conducted a selection of micro and meso evaluations, which were then able to inform the macro evaluation.
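To show why a shared outcome vocabulary matters, here is a minimal sketch of rolling results up from micro to macro. It assumes, purely for illustration, that micro means a single program, meso means a group of related programs (a "theme"), and macro means the whole portfolio. The themes, measure names and figures are invented and do not come from the AQ evaluation.

```python
from collections import Counter

# Hypothetical micro-level results: (program, theme, outcome measure, value).
# Every row uses the same outcome names, which is what makes roll-up possible.
MICRO_RESULTS = [
    ("grants", "funding", "jobs_created", 120),
    ("mentoring", "capability", "jobs_created", 30),
    ("mentoring", "capability", "participants", 200),
    ("innovation events", "capability", "participants", 1500),
]


def aggregate(results, level):
    """Sum each outcome measure at the requested level.

    level is 'micro' (group by program), 'meso' (group by theme)
    or 'macro' (one group for the whole portfolio).
    """
    if level not in ("micro", "meso", "macro"):
        raise ValueError(f"unknown level: {level}")
    totals = Counter()
    for program, theme, outcome, value in results:
        group = {"micro": program, "meso": theme, "macro": "portfolio"}[level]
        totals[(group, outcome)] += value
    return dict(totals)


print(aggregate(MICRO_RESULTS, "meso"))
print(aggregate(MICRO_RESULTS, "macro"))
```

Simple summation is itself an assumption. Where the same participant can appear in more than one program, the totals would need de-duplication before being reported at the portfolio level.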

3. Clearly define the measures of collective impact

To assess objectively whether policy outcomes have been achieved, they need to be translated into quantitative measures of success. This requires a precise definition of each policy outcome, informed by the data needed to support its measurement, as well as the granularity of that data.

Advance Queensland's policy outcome was to grow Queensland's knowledge economy. To understand whether this was achieved, we worked with the Queensland Government to agree on a working statistical definition of the term.

Translating outcomes into numbers is valuable in understanding the impact, but it simplifies outcomes, which creates a risk that context and nuance are overlooked. Therefore, collective impact evaluation also needs to gather qualitative data to provide additional context and to support analysis of the root causes underlying why something was, or was not, achieved.

4. Quantify impact on the system, beyond direct recipients

The challenge in measuring large-scale government initiatives like AQ is that they ideally aim to benefit not only direct recipients but also broader society. The idea is to create flow-on effects – such as the benefits to suppliers, customers, employees and other young inventors that might accrue when an inventor is supported to open a start-up business.

That is why it is important to define – and measure – the broader system and the program benefits.

Another reason to look at the broader system is to avoid the risk that unforeseen constraints elsewhere in the system inhibit the generation of better long-term outcomes. For example, more renewable energy may be generated by a new program, but without the transmission line capacity to transport it, the benefits remain very limited.

There are many factors that contribute to social and economic impact, so isolating the impact of a particular program needs careful and extensive analysis. This can be done in multiple ways, including through analysing trends before and after the program's introduction, and creating quasi-control groups.
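One common way to combine before-and-after trends with a quasi-control group is a difference-in-differences estimate. The sketch below is a simplified illustration of that general idea, not a description of the specific method used in the AQ evaluation. The function name and all figures are hypothetical.

```python
from statistics import mean


def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Estimate program impact as the change in the treated group
    minus the change in the comparison (quasi-control) group."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Invented values of some outcome measure, observed before and after a program starts.
estimate = difference_in_differences(
    treated_before=[100, 102],
    treated_after=[110, 114],
    control_before=[90, 92],
    control_after=[94, 96],
)
print(estimate)
```

The estimate is only as credible as the assumption that, without the program, the two groups would have followed similar trends, which is one reason the analysis needs to be careful and extensive.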

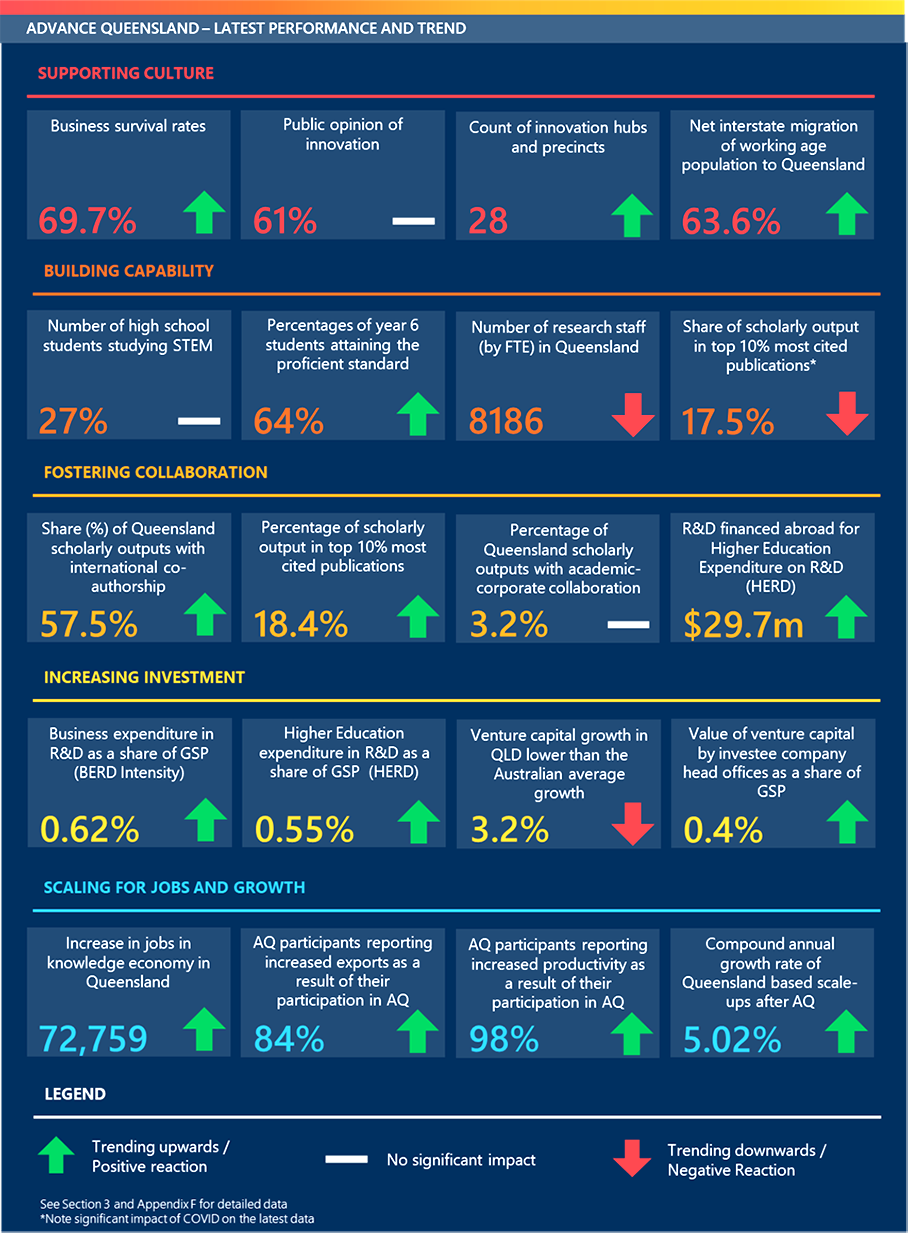

We used these techniques to assess AQ's contribution to the Queensland knowledge economy, including growth in knowledge jobs, exports and productivity. The dashboard below summarises key aspects of the knowledge economy impacted by AQ and shows how they have been trending since AQ was established across a range of metrics.

Sophisticated evaluation will deliver insights and demonstrate the value of investing in the process

As policy and program design increasingly aims to achieve collective impact to solve complex challenges, evaluation approaches need to evolve. Evaluating complex policies with multiple programs, objectives and stakeholders can seem overwhelming. Using a collective impact evaluation lens – through clear linkages between program and portfolio objectives, defining collective impact success and analysing broader economic and systemic impact – will cut through the complexity and deliver greater evaluation insight.

------------------------

Brianna Page

Brianna is a Director with Nous Group who specialises in public policy evaluation, particularly at the strategic and portfolio levels. She is skilled at using qualitative and quantitative data to its full potential to produce accurate, useful and actionable insights to improve public policy and program outcomes.

Mateja Hawley

Mateja is a Director with Nous Group who has expertise in delivering complex evaluations, often in fast-changing environmental, policy and organisational contexts. Her background in technology, innovation management and engineering means she can effectively guide analysis of complex datasets and support recommendations with a robust evidence base.

We acknowledge the Australian Aboriginal and Torres Strait Islander peoples of this nation. We acknowledge the Traditional Custodians of the lands in which we conduct our business. We pay our respects to ancestors and Elders, past and present. We are committed to honouring Australian Aboriginal and Torres Strait Islander peoples’ unique cultural and spiritual relationships to the land, waters and seas and their rich contribution to society.