Welcome to the AES Blog

Everyday ethics challenges for evaluators

By Squirrel Main, Eleanor Williams, Kristy Hornby, Mandy Charman

Ethics are part and parcel of any evaluation journey. Every evaluator, at some point, will face tricky situations where they'll need to balance ethical principles with practical decisions. While formal ethics processes usually revolve around consent and transparency in evaluation design, the real challenges often pop up beyond that. Evaluators frequently work in environments full of volatility, uncertainty, complexity, and ambiguity (known as 'VUCA' conditions), which means we need to stay flexible and responsive throughout the entire evaluation process to keep things on track.

A lot of the discussion about ethics in evaluation in Australia seems to centre on meeting the requirements of the National Statement on Ethical Conduct in Human Research and the needs of Human Research Ethics Committees (HRECs). But the less glamorous, day-to-day ethical challenges we face when we're actually designing, delivering, and reporting on evaluations tend to get less attention. This post offers early thinking on a basic framework for evaluators who want to reflect on their own ethical decision-making, and aims to kickstart a conversation about whether more guidance, advice, or support is needed.

Why do ethical challenges crop up?

How common are ethical challenges?

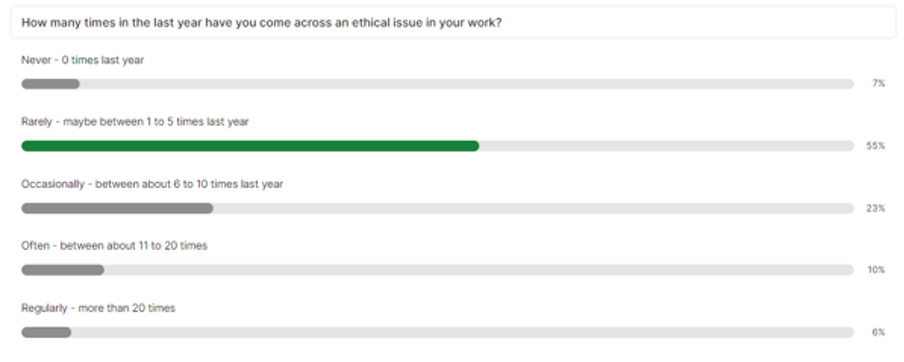

At the 2024 Australian Evaluation Society Conference, the authors ran a quick poll during a panel discussion, asking attendees how often they run into ethical issues. Seventy-one people responded, and the results were interesting. Good news first – most people (55%) said they rarely come across ethical challenges (1-5 times a year). However, 45% of respondents admitted they encounter them more frequently, with four people saying they deal with ethical dilemmas more than 20 times a year!

- Engaging with First Nations communities

A recurring theme was the lack of meaningful engagement with First Nations people in evaluations. For ethical evaluation, it's crucial to include their perspectives and ensure the work brings real benefits to their communities.

- Integrity and accuracy

Misrepresenting findings, cherry-picking data, or pushing for only positive outcomes can seriously damage the credibility of an evaluation. Sticking to your interpretation of the facts – even when it's uncomfortable – is key to ethical practice.

- Power imbalances

Power dynamics often skew evaluation results, especially when stakeholders try to pressure evaluators into changing findings. Maintaining independence and resisting external pressure is crucial for ethical evaluation.

- Transparency and stakeholder involvement

Evaluations need to be transparent, with stakeholders kept informed and involved in decision-making. This can help avoid ethical problems like the suppression of negative findings or selective reporting.

The responses to these challenges were just as varied as the challenges themselves. Some people worked through them by discussing or negotiating outcomes. Others built trust with stakeholders or escalated the issue to senior leaders. A few even walked away from the work altogether! Our favourite response, though, was someone who said they coped by 'writing cathartic ranting blogs under an anonymous pen name.' This variety shows how wide-ranging ethical challenges can be, as are our responses as evaluators.

How can we define the severity of ethical challenges?

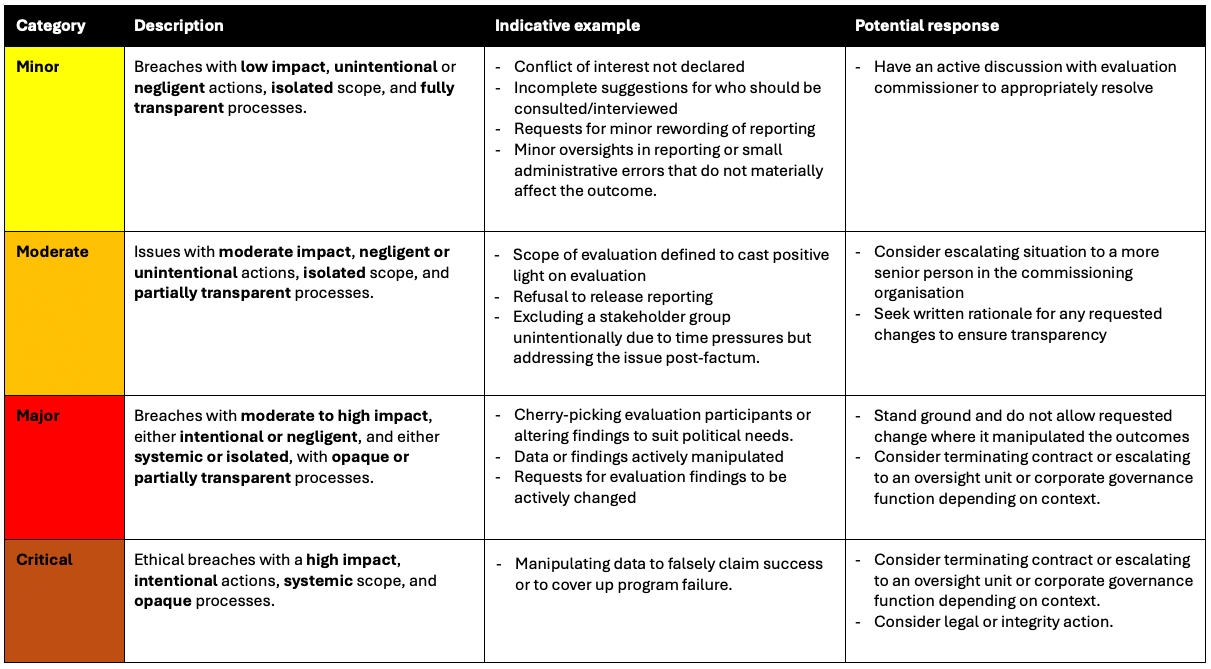

- Minor

Low-impact breaches that are unintentional and isolated, with full transparency.

Example: Minor oversights in reporting or small errors that do not materially affect the outcome or requests for small wording changes from evaluation commissioners.

- Moderate

Negligent or unintentional issues with moderate impact and partially transparent processes.

Example: Excluding a group of stakeholders from consultations by accident but addressing the issue later.

- Major

These breaches might be either intentional or negligent, and they can range from isolated incidents to systemic issues.

Example: Cherry-picking evaluation participants or changing findings to suit political needs.

- Critical

High-impact breaches that are systemic and intentional, often with opaque processes.

Example: Manipulating data to falsely claim a program's success or to cover up program failure.

While this blog will not dive into fully defining each level, the examples above show what might fall into each category and the types of responses these challenges warrant.

How should we respond to ethical challenges?

- Minor

These are the smaller, unintentional breaches. Think of things like a minor conflict of interest or a few selective suggestions for who should be consulted or interviewed. These can usually be resolved through an open conversation with the commissioner to iron things out.

- Moderate

Here, we're talking about issues with a bigger impact, but still mostly unintentional. Maybe the scope of the evaluation was defined in a way that shines a more positive light on the results, or perhaps a vulnerable group was unintentionally excluded. In these cases, it might be time to escalate the issue to someone higher up or ask for a written explanation of any requested changes to keep things transparent.

- Major

These are the kinds of breaches that involve deliberate manipulation or negligence – things like cherry-picking participants or changing findings to fit political needs. In these cases, it is essential to stand firm and not allow any changes that would manipulate the evaluation findings or outcome. If things get too sticky, it might even be time to consider terminating the contract.

- Critical

These are the really serious breaches with high impacts, like manipulating data to falsely claim success for a program or to cover up program failure. When it comes to these situations, it is probably best to walk away from the project and, in extreme cases, consider taking legal action.
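For readers who like to see things laid out as a lookup, the four kinds of response described above can be sketched as a small table keyed by severity level. This is illustrative only: the `Severity` enum, the `RESPONSES` table and the `suggested_responses` function are hypothetical names, and the action lists paraphrase this post rather than any formal guidance.

```python
from enum import Enum


class Severity(Enum):
    """The four severity levels used in this post."""
    MINOR = 1
    MODERATE = 2
    MAJOR = 3
    CRITICAL = 4


# ASSUMPTION: wording is paraphrased from the post, not formal guidance.
RESPONSES = {
    Severity.MINOR: [
        "Have an open conversation with the commissioner",
    ],
    Severity.MODERATE: [
        "Escalate the issue to someone more senior",
        "Ask for a written explanation of any requested changes",
    ],
    Severity.MAJOR: [
        "Stand firm and refuse changes that manipulate findings",
        "Consider terminating the contract",
    ],
    Severity.CRITICAL: [
        "Walk away from the project",
        "In extreme cases, consider legal action",
    ],
}


def suggested_responses(level: Severity) -> list[str]:
    """Return a copy of the suggested responses for a severity level."""
    return list(RESPONSES[level])


if __name__ == "__main__":
    for level in Severity:
        print(level.name.title(), "->", "; ".join(suggested_responses(level)))
```

A table like this is only a starting point for a conversation; real situations will rarely fit one row neatly.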

How do we refine our framework?

- Impact

Who is affected, and how bad is it? For example, if the issue has the potential to harm specific populations, like First Nations people, or breaches the rights of program participants, that's clearly high impact. If only a small group is affected, the impact might be moderate, and if there's little visible harm, it could be low impact.

- Intent

Was the breach intentional, negligent, or accidental? If there's clear intent to manipulate findings, that is obviously more severe. If it was unintentional but careless, like forgetting to seek ethical clearance, it is still an issue, but less so.

- Scope

Is the problem systemic, affecting the whole evaluation, or is it a one-off issue? Systemic issues, like pressure from higher-ups to avoid awkward findings across multiple evaluations, are more serious than isolated data collection errors.

- Transparency

How open is the process? Is there full transparency and accountability, or are key details hidden from stakeholders? The less transparency, the bigger the ethical issue.
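One way to make the four questions above concrete (who is affected, was it intentional, is it systemic, how open is the process) is to rate each one and add up the ratings. The sketch below does this under loudly stated assumptions: the `Breach` class, the numeric ratings and the cut-offs in `classify` are all hypothetical and are not part of the framework in this post. They were chosen only so that the post's own descriptions of Minor and Critical breaches land in the expected level.

```python
from dataclasses import dataclass

# Ratings for each question, ordered from least to most concerning.
# ASSUMPTION: the numeric values are illustrative, not from the post.
IMPACT = {"low": 1, "moderate": 2, "high": 3}
INTENT = {"accidental": 1, "negligent": 2, "intentional": 3}
SCOPE = {"isolated": 1, "systemic": 3}
TRANSPARENCY = {"full": 1, "partial": 2, "opaque": 3}


@dataclass(frozen=True)
class Breach:
    impact: str
    intent: str
    scope: str
    transparency: str

    def score(self) -> int:
        """Sum the four ratings (ranges from 4 to 12)."""
        return (
            IMPACT[self.impact]
            + INTENT[self.intent]
            + SCOPE[self.scope]
            + TRANSPARENCY[self.transparency]
        )


def classify(breach: Breach) -> str:
    """Map a total score to a severity level.

    ASSUMPTION: these cut-offs are hypothetical and untested in practice.
    """
    total = breach.score()
    if total <= 5:
        return "Minor"
    if total <= 7:
        return "Moderate"
    if total <= 10:
        return "Major"
    return "Critical"


if __name__ == "__main__":
    oversight = Breach("low", "accidental", "isolated", "full")
    cover_up = Breach("high", "intentional", "systemic", "opaque")
    print(classify(oversight))
    print(classify(cover_up))
```

A simple sum treats the four questions as equally important, which may well be wrong; deciding how to weight them is exactly the kind of refinement this framework still needs.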

Want to join the conversation?

If this topic resonates with you, or you've got feedback on the categories or examples we've shared, we are keen to hear more about what people are experiencing out in the field. Grosvenor is looking to dig deeper into the frequency and severity of these ethical issues, in partnership with the Australian Centre for Evaluation, The University of Melbourne's Assessment and Evaluation Research Centre, the Centre for Excellence in Child and Family Welfare's Outcomes Practice Evidence Network (OPEN) and the Paul Ramsay Foundation. If you'd like to participate in a follow-up survey or case study interview, we would love you to complete this survey: https://www.surveymonkey.com/r/everydayethics2025

We also need your help in distributing this survey as far and wide across the Australian evaluation community as possible – please share it amongst your networks and help us get the word out about this important exploratory research! The survey closes at the end of May 2025.

Recommended further reading

- American Evaluation Association (2018). Guiding Principles for Evaluators. Accessible via: https://www.eval.org/About/Guiding-Principles

- Hutchinson, K. (Ed.) (2019). Evaluation failures. SAGE Publications, Inc. Accessible via: https://doi.org/10.4135/9781544320021 and https://au.sagepub.com/en-gb/oce/evaluation-failures/book260109#contents

- International Program for Development Evaluation Training (2012). Evaluation ethics, politics, standards and guiding principles. Accessible via: https://www.betterevaluation.org/tools-resources/evaluation-ethics-politics-standards-guiding-principles

We acknowledge the Australian Aboriginal and Torres Strait Islander peoples of this nation. We acknowledge the Traditional Custodians of the lands in which we conduct our business. We pay our respects to ancestors and Elders, past and present. We are committed to honouring Australian Aboriginal and Torres Strait Islander peoples’ unique cultural and spiritual relationships to the land, waters and seas and their rich contribution to society.