Welcome to the AES Blog

How to avoid the evaluation fads and remain in fashion with Brad Astbury

by Jade Maloney, Jo Farmer and Eunice Sotelo

With so many authors and approaches to evaluation, knowing what to pay attention to can be hard. Evaluation, just like the catwalk, is subject to the whims of the day. How do you know what’s a passing fad and what will remain in fashion?

At the AES Victoria regional seminar in November, Brad Astbury suggested the following 10 books will stand the test of time.

1960s

In the age of bell bottoms, beehives and lava lamps, and protests against the Vietnam war, evaluation was on the rise. The U.S. Congress passed the Elementary and Secondary Education Act (ESEA) in 1965, the first major piece of social legislation to require evaluation of local projects undertaken with federal funds and to mandate project reporting. The hope was that timely and objective information about projects could reform local governance and practice of education for disadvantaged children, and that systemic evaluation could reform federal management of education programs.

While you wouldn’t return to the fashion or the days when evaluation was uniquely experimental, hold onto your copy of Experimental and quasi-experimental designs for research by Donald T. Campbell and Julian C. Stanley. These two coined the concepts of external and internal validity. Even if you’re not doing an experiment, you can use a validity checklist.

1970s

Back in the day of disco balls and platform shoes, the closest thing evaluation has to a rock star – Michael Quinn Patton – penned the first edition of Utilisation-focused evaluation. Now in its fourth edition, it’s the bible for evaluation consultants. It’s also one of the evaluation theories with the most solid evidence base – drawn from Patton’s research. In today’s age of customer centricity, it’s clear focusing on intended use by intended users is a concept that’s here to stay.

Carol Weiss’s message – ignore politics at your peril – could also have been written for our times. Her Evaluation research: methods of assessing program effectiveness provides a solid grounding in the politics of evaluation. It also describes theory-based evaluation – an approach that goes beyond the experimental and is commonly used today.

1980s

While you may no longer work out in fluorescent tights, leotards and sweat bands, your copy of Qualitative evaluation methods won’t go out of fashion any time soon. In his second appearance on the list, Michael Quinn Patton made a strong case that qualitative ways of knowing are not inferior.

You may also know the name of the second recommended author from this decade, but more likely for the statistical test that bears his name (Cronbach’s alpha) than his contribution to evaluation theory, which is under-acknowledged. In Toward reform of program evaluation, Lee Cronbach and associates set out 95 theses to reform evaluation (in the style of Martin Luther’s 95 theses). That many of the 95 theses still ring true could be seen as either depressing or a consolation for the challenges evaluators face. For Astbury – ever the evaluation lecturer – thesis number 93 “the evaluator is an educator; his success is to be judged by what others learn” is the standout, but there’s one in there for everyone. (No. 13. “The evaluator’s professional conclusions cannot substitute for the political process” aligns with Weiss’s message, while No. 9. “Commissioners of evaluation complain that the messages from evaluations are not useful, while evaluators complain that the messages are not used” could have been pulled from Patton’s Utilisation-focused evaluation).

1990s

Alongside Vanilla Ice, the Spice Girls and the Macarena, the 90s brought us CMOCs – context-mechanism-outcome configurations – and a different way of doing evaluation. Ray Pawson and Nick Tilley’s Realistic evaluation taught us not to just ask what works, but what works, for whom, in what circumstances, and why?

To balance the specificity of this perspective, the other recommendation from the 90s is agnostic. Foundations of program evaluation: Theories of practice by William Shadish Jr., Thomas Cook and Laura Leviton describes the three stages in the evolution of evaluation thinking. It articulates the criteria for judging the merits of evaluation theories: the extent to which they are coherent on social programming, knowledge construction, valuing, use, and practice. The message here is there is no single theory or ideal theory of evaluation to guide practice.

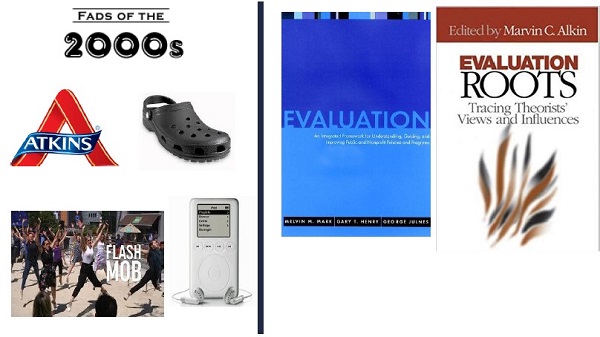

2000s

While iPods and flash mobs are a faint memory, these two books have had a lasting impact: Evaluation: An integrated framework for understanding, guiding, and improving public and non-profit policies and programs by Melvin Mark, Gary Henry and George Julnes; and Evaluation roots: Tracing theorists’ views and influences edited by Marvin Alkin.

The former covers four key purposes of evaluation: to review the merit of programs and their value to society (as per Scriven’s definition); to improve the organisation and its services; to ensure program compliance with mandates; to build knowledge and expertise for future programs. The take-out is to adopt a contingency perspective.

The latter is the source of the evaluation theory tree – which sparked commentary at this year’s AES and AEA conferences for its individualism, and limited gender and cultural diversity. Still, Brad reminds us that there’s value in learning from the thinkers as well as the practitioners; we can learn in the field but also from the field. According to Sage, Alkin’s Evaluation roots is one of the best-selling books on evaluation.

It’s a reminder that there is much still to learn from those who’ve come before – that we can learn as much from those who’ve thought about evaluation for decades as we can from our practical experience.

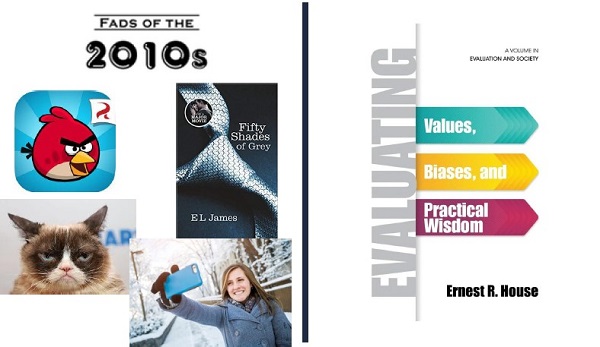

2010s

The age of the selfie has not yet faded and nor has Evaluating values, biases and practical wisdom by Ernest R. House. It covers three meta-themes: values (Scriven, House); biases (Campbell and the experimental approach; expanding the concept of validity); and practical wisdom (on Aristotle’s notion of praxis – blending/embedding theory and practice). It gives us the wise advice to pay more attention to cognitive biases and conflicts of interest.

So now to the questions.

Why didn’t Scriven make the list? Because he’s written few books and there wasn’t enough room in the 90s. Nevertheless, Michael Scriven’s Evaluation thesaurus and The Logic of evaluation are among the books Astbury notes are worth reading.

What about local authors? Grab a copy of Building in research and evaluation: Human inquiry for living systems by Yoland Wadsworth and Purposeful program theory: Effective use of theories of change and logic models by Sue Funnell and Patricia Rogers. If you’re new to evaluation, Evaluation methodology basics: The nuts and bolts of sound evaluation – from this year’s AES conference keynote speaker E. Jane Davidson – can help you get a grasp on evaluation in practice.

Brad ended by sounding a word of warning not to get too caught up in the fads of the day. Buzzwords may come and go, but to avoid becoming a fashion victim, these ten books should be a staple of any evaluator’s bookshelf.

Brad Astbury is a Director at ARTD Consulting, based in the Melbourne office. He has over 18 years’ experience in evaluation and applied social research and considerable expertise in combining diverse forms of evidence to improve both the quality and utility of evaluation. He has managed and conducted needs assessments, process and impact studies and theory-driven evaluations across a wide range of policy areas for industry, government, community and not-for-profit clients. Prior to joining ARTD in 2018, Brad worked for over a decade at the University of Melbourne, where he taught and mentored postgraduate evaluation students.

We acknowledge the Australian Aboriginal and Torres Strait Islander peoples of this nation. We acknowledge the Traditional Custodians of the lands in which we conduct our business. We pay our respects to ancestors and Elders, past and present. We are committed to honouring Australian Aboriginal and Torres Strait Islander peoples’ unique cultural and spiritual relationships to the land, waters and seas and their rich contribution to society.