Workshop: Fundamentals of quasi-experimental methods in evaluation (Online 15 & 22 August 2024)


Date and time: Thursday 15 August and Thursday 22 August 2024, 10.00am to 1.00pm AEST (registration from 9.45am). This is a full-day workshop delivered over two sessions; registrants are to attend both sessions.

Venue: Via Zoom. Details will be emailed to registrants just prior to the workshop start time.

Facilitator: Steve Yeong

Register online by: 14 August 2024, unless sold out prior. Limited to 25 participants.

Fees (GST inclusive): Members $325.00, AES Organisational member staff $460.00, Non-members $535.00, Student member $155.00, Student non-member $250.00*. *Students must send proof of their full-time student status to

Workshop Overview

At its core, evaluation, whether you choose to think of it as a stand-alone discipline (e.g., akin to economics or sociology) or as the process of making a judgement (i.e., analogous to the way in which the scientific method transcends any single discipline), involves three central activities:

- Providing a description of the evaluand (i.e., the subject of the evaluation, typically a program, policy or legislative change). In describing the evaluand, the evaluator must seek only to understand the context within which the evaluand operates (e.g., by taking into account political and cultural norms), the intended aims of the evaluand, affected stakeholders, and potential unintended consequences.

- Setting out a set of criteria by which to judge the evaluand (typically through a set of key evaluation questions). This activity involves two parts. The first is to determine what the commissioner of the evaluation believes to be important for the evaluation (i.e., what information is going to be useful for decision-making); the second is to develop a set of Key Evaluation Questions (KEQs) that can be used to obtain, organise and interpret this information.

- Identifying and implementing the appropriate set of methods to judge the evaluand given its purported causal mechanisms and context. Evaluators deploy a variety of tools to achieve this aim. For example, semi-structured interviews are often used to obtain a deep understanding of the perspective of certain stakeholders, surveys are used to obtain a broad understanding of the perspective of certain stakeholders, and workshops can be run with different stakeholders to facilitate the sharing and generation of knowledge and practices across the evaluand.

Randomised Controlled Trials (RCTs) are one tool that resides within the third central evaluation activity. RCTs are useful because they enable evaluators to know that a change in an outcome is a result of the program, not something else (e.g., pre-existing differences between a treatment and control group, or the effect of some other ‘third variable’ associated with both participation in the program and the outcome of interest).
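The role of a ‘third variable’ can be illustrated with a small simulation. The sketch below is illustrative only and is not part of the workshop materials: the variable `motivation`, the effect sizes and the sample size are all hypothetical assumptions. It compares the naive treated-minus-control difference when people self-select into a program with the same difference when participation is randomised.

```python
import random
import statistics

def simulate(randomised, n=20000, true_effect=2.0, seed=0):
    """Return the naive treated-minus-control difference in mean outcomes."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        # Hypothetical unobserved 'third variable' that also drives the outcome
        motivation = rng.gauss(0, 1)
        if randomised:
            # RCT: a coin flip decides participation, independent of motivation
            participates = rng.random() < 0.5
        else:
            # Self-selection: more motivated people are more likely to participate
            participates = motivation + rng.gauss(0, 1) > 0
        outcome = true_effect * participates + 3.0 * motivation + rng.gauss(0, 1)
        (treated if participates else control).append(outcome)
    return statistics.mean(treated) - statistics.mean(control)

naive_estimate = simulate(randomised=False)
rct_estimate = simulate(randomised=True)
print(f"self-selected: {naive_estimate:.2f}, randomised: {rct_estimate:.2f}")
```

Under these assumptions the self-selected comparison overstates the assumed true effect of 2.0, because participants differ from non-participants in motivation, while the randomised comparison lands close to it.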

RCTs are, however, not always possible or even desirable (e.g., it would not be ethical to randomly incarcerate some fraction of the population to determine whether there is a causal link between incarceration and reoffending). Thankfully, there is a set of quasi-experimental methods that can be used to mimic an RCT using existing administrative data (without changing any policy settings). This enables evaluators to get the benefits of an RCT in situations where they have been brought in too late to design and implement an RCT, or the use of an RCT is unethical.

Workshop Content

In this workshop, we will cover: the experimentalist approach to causality, Omitted Variables Bias, regression, control variables, matching, and then an introduction to the set of quasi-experimental methods that can be used to mimic an RCT (i.e., Instrumental Variables, Difference-in-Differences and Regression Discontinuity Designs).
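As a flavour of one of these methods, the arithmetic behind a basic two-group, two-period Difference-in-Differences estimate can be sketched as follows. This is a minimal illustration, not the workshop's own material: the function name and the group means are hypothetical, and the estimate has a causal interpretation only under the assumption that the two groups would have followed parallel trends in the absence of the program.

```python
def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Change in the treated group minus change in the control group."""
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change

# Hypothetical mean outcomes (e.g., a reoffending rate in percent)
estimate = difference_in_differences(
    treated_before=40.0, treated_after=31.0,
    control_before=38.0, control_after=35.0,
)
print(estimate)  # -6.0
```

Here the treated group's outcome fell by 9 points and the control group's by 3 points, so the estimated effect is a 6-point reduction. The control group's change stands in for what would have happened to the treated group without the program.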

Workshop Objectives

This workshop aims to provide attendees with:

- the fundamental intuition behind RCTs (including how to plan them);
- an overview of the (quasi-experimental) methods that can be used to mimic an RCT (after the fact); and
- guidance around when these methods are appropriate to use.

PL competencies

This workshop aligns with competencies in the AES Evaluator’s Professional Learning Competency Framework. The identified domains are:

- Domain 1 – Evaluative attitude and professional practice
- Domain 2 – Evaluation theory
- Domain 4 – Research methods and systematic inquiry
- Domain 7 – Evaluation Activities

Who should attend?

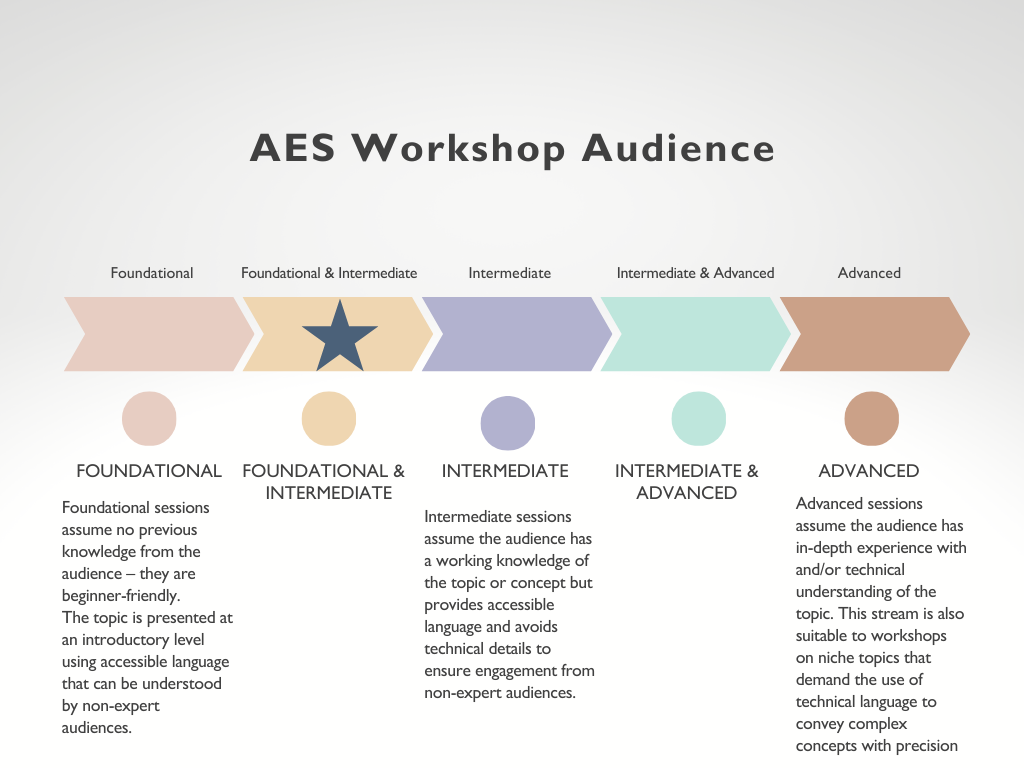

Foundational and Intermediate

There are no prerequisites for this workshop. The workshop is aimed at individuals working in public policy who are tasked with either conducting or reviewing evaluative work (i.e., both commissioners and evaluators) that involves establishing a causal link between an intervention and an outcome.

For commissioners of evaluative work, this could be in situations where an RCT (or that type of evidence) is requested by a minister or senior decision-maker. For evaluators, this situation could arise when a client makes a similar request.

Workshop start times

- VIC, NSW, ACT, TAS, QLD: 10.00am
- SA, NT: 9.30am
- WA: 8.00am
- New Zealand: 12.00pm

For other time zones please go to https://www.timeanddate.com/worldclock/converter.html

About the facilitator

Steve has diverse professional experience. He currently works as Research Manager at the NSW Bureau of Crime Statistics and Research. In this role, Steve leads the quantitative impact evaluation of high-profile, politically sensitive criminal justice initiatives and legislative changes.

Prior to starting that position, Steve was a Manager at ARTD Consultants, where he conducted mixed-methods evaluations of various programs, policies, and legislative changes for government clients across the country, and an Economics Lecturer at UTS, where he taught courses in data science and public finance.

Steve holds a PhD in Economics from the University of Sydney, focusing on the use of quasi-experimental methods in public policy settings; and First Class Honours and the University Medal in Economics from UTS.

Event Information

| Item | Details |
| --- | --- |
| Event Date | 15 Aug 2024 10:00am |
| Event End Date | 22 Aug 2024 1:00pm |
| Cut Off Date | 14 Aug 2024 4:00pm |
| Location | Zoom |
| Categories | Online Workshops |