FestEVAL24: AI & Machine Learning

FestEVAL logo created by ChatGPT4 selected by AES members

Date and time: Tuesday 28 May, Wednesday 29 May, Thursday 30 May 12-2pm AEST

Venue: Via Zoom. Details will be emailed to registrants just prior to the start time

Event overview: information will be updated regularly

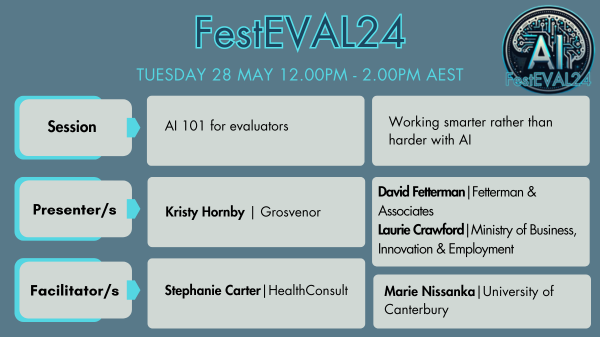

| Tuesday 28 May 12.00pm | AI 101 for evaluators |

The session will provide attendees with a foundational orientation to the current state of play in AI. Attendees will be guided through common language in the field, as well as Australian and global policy settings regarding this technology. Following this overview, Kristy will discuss the key opportunities and risks for evaluators in engaging with AI, both as part of their own service delivery and as part of their work to evaluate AI-enabled programs. The session will be interactive, involving audience polls and a democratic approach to ensuring the audience’s burning questions are addressed live in session.

Attendees will gain:

- familiarisation with key AI concepts and terminology
- a high-level summary of global legislative and regulatory contexts
- a snapshot of critical opportunities and risks to be aware of, in their role evaluating AI-enabled programs or when using AI technology directly

| Tuesday 28 May 1.00pm | Working smarter rather than harder with AI |

During this session, we will explore practical applications of AI in the field of evaluation. David will showcase one of his innovative AI inventions, demonstrating its utility and the underlying motivations that led to its creation. Laurie will share their personal learning journey, offering insights into the principles that evaluators should consider in this new age of AI-driven evaluation methodologies. Together, we will delve into the intersection of AI and evaluation, gaining a deeper understanding of the potential of AI in this field.

Presenters

David Fetterman is the author of 18 books and is the founder of Fetterman & Associates,

Laurie Crawford is a queer, non-binary pākehā. They grew up in the Manawatū and have since found a home

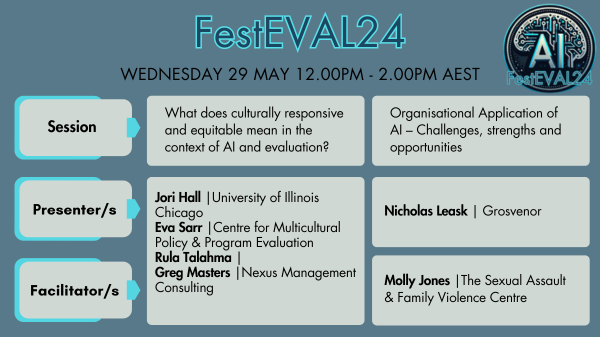

| Wednesday 29 May 12.00pm | What does culturally responsive and equitable mean in the context of AI and evaluation? |

This session will expose workshop participants to some fundamental social and cultural issues which are often amplified by AI:

1. What do we mean by culture?
2. The impact of AI on the cultural responsiveness of the outcomes of evaluation
3. Why is it important to include design, implementation and equity criteria for artificial intelligence?
4. Should evaluation and AI incorporate considerations of trust and trustworthiness, and if so, how?

Each of the panel members will provide a brief, provocative presentation around these issues prior to some discussion amongst the panel. Workshop participants will then have the opportunity to interact with the panel in a Q&A session. The session will be informative and challenging!

Presenters

Jori Hall received her PhD in Educational Policy Studies from the University of Illinois at Urbana-Champaign.

Eva Sarr is an indigenous Serer woman from Sene-Gambia, in West Africa. She is also a 6th generation

Rula Talahma

Greg Masters is the director of Nexus Management Consulting which he established in 1996. Nexus specialises

| Wednesday 29 May 1.00pm | Organisational Application of AI – Challenges, strengths and opportunities |

The presentation will provide attendees with an understanding of the weaknesses, strengths and opportunities of AI capabilities and their applications to evaluation. It will outline considerations for deploying AI in different organisations and provide examples of AI uses in different environments (e.g. government, NGO, private sector).

Presenter

Nicholas Leask has been working in the ICT domain for over 30 years, and in ICT procurement for over 20.

| Thursday 30 May 12.00pm | The ‘AI in Evaluation’ Great Debate |

For better or worse, AI is here to stay. Already it has achieved prominence in a range of domains, and it shows no signs of slowing down. Evaluation will be no different. Those of us in evaluation have a decision to make: do we adopt or do we reject using AI in our work? Are we willing to accept its constraints and limitations in exchange for the ways it can tackle the more mundane aspects of our work?

Presenters

Team One

David Fetterman is the author of 18 books and is the founder of Fetterman & Associates. David has worked in diverse contexts, including Japan, Brazil, Ethiopia, and Aotearoa. A former president of the American Evaluation Association, David has earned several prestigious awards and he was named the top anthropologist of the decade in 2020, which was celebrated in Times Square.

Kristy Hornby is an Associate Director at Grosvenor who established and still runs its Victorian program

Erum Rasheed is an evaluator with over a decade of experience generating evidence and insights for

Team Two

Gerard Atkinson is a Director at ARTD Consultants who leads the Victorian practice and oversees the

Michael Noetel is a psychologist and an academic trying to find the best ways of helping others, and to then

Saville Kushner is Adjunct Professor at Drew University, USA, and Emeritus at the University of the West of

Hosts

Matt Healey is a Principal Consultant at First Person Consulting, and co-convenor of the Design

Natalie Arthur is a Senior Consultant with Abt Global’s Australian Domestic Team. She is currently working on

| Thursday 30 May 1.00pm | FestEVAL Club |

Join us for the closing hour of FestEVAL at FestEVAL Club. This is a relaxed and interactive opportunity to work with others to explore how generative AI can support creative and flexible thinking. Be prepared to practise some of the learnings from the previous sessions and delve into some creativity and storytelling.

Molly Jones is an early-career evaluator working in a specialist family violence and sexual assault setting. With a diverse professional background in secondary teaching, participatory-focused youth work, community engagement and organising, and design thinking and facilitating, she is interested in multidisciplinary and creative approaches to complex challenges, and in how AI can be harnessed as a tool for this.

Each session will be recorded and available on the AES YouTube channel approximately four weeks after the date. We suggest you subscribe to the AES YouTube channel to be notified when new recordings are added.

Session start times

- VIC, NSW, ACT, TAS, QLD: 12.00pm
- SA, NT: 11.30am
- WA: 10.00am
- New Zealand: 2.00pm

For other time zones please go to https://www.timeanddate.com/worldclock/converter.html

Please ensure you have access to your email address just prior to the start time to access Zoom details. Please also check your email address is correct on your registration form before submitting. Thanks.

Event Information

| Event Date | 28 May 2024 12:00pm |
| Event End Date | 30 May 2024 2:00pm |
| Cut Off Date | 29 May 2024 12:00pm |
| Location | Zoom |
| Categories | Special Online Event |

Kristy Hornby is an Associate Director at Grosvenor who established and still runs its Victorian program evaluation practice. She has completed more than 310 evaluation, review and strategy projects to date across more than a decade of practice. Experienced across mixed methods approaches, she delivers evaluation services across the spectrum of program design advice, developing monitoring systems, conducting evaluations, building evaluation capability and mentoring new evaluators. She is always keen to experiment with new technology and methods to explore the ‘what if’ and ‘how come’. She has evaluated around 10 AI-enabled programs over her time and sees the advent of AI technology as a new frontier in evaluation practice which we all must grapple with to remain contemporary in providing meaningful evaluations. Qualified with a BA, MBA and an MEvaluation (ongoing), she loves to make sense of complex contexts, connect with new people and have fun in her work. Kristy is also the Chair of IPAA Victoria’s Risk Community of Practice and has held multiple for-purpose director roles.

David Fetterman is the author of 18 books and is the founder of Fetterman & Associates, renowned for developing the Empowerment Evaluation Approach. David brings 25 years of experience from Stanford University, where he held various roles, including faculty member at the School of Education, director of evaluation at the School of Medicine, and senior member of the administration. He also serves as a faculty member at Pacifica Graduate Institute and Adjunct Professor at Claremont Graduate University. Prior to his time at Stanford, he was a professor and research director at the California Institute of Integral Studies, Principal Research Scientist at the American Institutes for Research, and a senior associate at RMC Research Corporation. David has worked in diverse contexts, including Japan, Brazil, Ethiopia, and Aotearoa. A former president of the American Evaluation Association, David has earned several prestigious awards and he was named the top anthropologist of the decade in 2020, which was celebrated in Times Square.

Laurie Crawford is a queer, non-binary pākehā. They grew up in the Manawatū and have since found a home in Whanganui-a-Tara. Before discovering evaluation, Laurie’s first loves were philosophy and psychology. They have a research-focussed MA in psychology from Massey University and have worked in several mental health roles. Laurie has now been evaluating in the public sector for seven years, across multiple subject areas and methodologies. The position of evaluation at the intersection of knowledge generation and real-world impacts is a constant source of fascination for Laurie. They value mixed methods and are equally comfortable in qualitative and quantitative methodologies. Recently Laurie started a learning deep dive into AI tools and their potential for use in Evaluation, to which they bring a personal interest in Sci-Fi as well as their knowledge of philosophy, psychology, and evaluation.

Jori Hall received her PhD in Educational Policy Studies from the University of Illinois at Urbana-Champaign. She is an award-winning author and multidisciplinary researcher. Jori’s research is concerned with social inequalities and the overall rigor of social science research. Her work addresses issues of research methodology, cultural responsiveness, and the role of values and privilege within the fields of evaluation, education, and health. Hall has published numerous peer-reviewed works in scholarly venues, authored the book “Focus Groups: Culturally Responsive Approaches for Qualitative Inquiry and Program Evaluation”, and was selected as a Leaders of Equitable Evaluation and Diversity (LEEAD) fellow by The Annie E. Casey Foundation. Jori was the 2020 recipient of the American Evaluation Association’s Multiethnic Issues in Evaluation Topical Interest Group Scholarly Leader Award for scholarship that has contributed to culturally responsive evaluation. She currently serves as a researcher for programs funded by the Spencer Foundation. She was also the Co-Editor-in-Chief for the “American Journal of Evaluation.”

Eva Sarr is an indigenous Serer woman from Sene-Gambia, in West Africa. She is also a 6th generation Australian woman of indigenous Celtic-Scottish and Irish descent. Her father was Muslim while her mother was Catholic. Eva, a trained public health practitioner, applies pragmatic evaluation skills guided by prominent evaluation theories and methodologies that advocate collaboration, participation, equity, social justice and empowerment. She has held diverse roles in the international development, not-for-profit (NFP), Indigenous, State, and Federal Government sectors. Throughout her career, Eva has contributed to a variety of small, medium, and large-scale/complex health, education, and employment projects and programs, most of which have included various forms of evaluation capacity building with a focus on equity and cultural responsiveness. Eva is also the founding director of the Centre for Multicultural Policy and Program Evaluation and one of two Founding Chairs of the AES’ first Multicultural Special Interest Group. In 2020, Eva was named an affiliate faculty member of the Center for Culturally Responsive Evaluation and Assessment (CREA), making her one of only two CREA faculty affiliates based in Australia.

Greg Masters is the director of Nexus Management Consulting which he established in 1996. Nexus specialises in the public and not-for-profit sectors and aims to help organisations achieve better results for their clients and communities. Greg is an experienced evaluator, having led numerous evaluations of a diverse range of programs in the health, community service, environmental, disability and educational sectors, among others. As an evaluator, Greg sees himself as an advocate for change which necessarily involves establishing respectful, honest relationships with clients and providing a vehicle for the voice of those who are often not heard. Greg has been a member of the NSW AES C for some years and was a member of the working party that designed the FestEvals in 2020 and 2021.

Nicholas Leask has been working in the ICT domain for over 30 years, and in ICT procurement for over 20. He’s been involved in helping customers understand and implement every major business technology innovation we have come to rely on. This includes office automation, document and records management, enterprise resource planning, web, energy markets, immunogenetics, information and cyber security, and artificial intelligence. He enjoys working with customers to understand their needs and transform business operations using ICT as an enabler.

Erum Rasheed is an evaluator with over a decade of experience generating evidence and insights for government and social impact organisations. Generative AI was a game-changer for her, significantly enhancing her data analysis capabilities and enabling her to master complex data analysis and visualisation in Python and R, skills that once seemed daunting. However, she has also learned firsthand the pitfalls of AI, such as being misled by its convincing but fabricated outputs, often referred to as ‘hallucinations’. Currently, Erum is preparing her PhD application to delve deeper into the dual edges of generative AI.

Gerard Atkinson is a Director at ARTD Consultants who leads the Victorian practice and oversees the Learning and Development program for the firm. He has worked with big data and AI approaches for over 20 years, originally as a physicist then as a strategy consultant and evaluator. He has an MBA in Business Analytics focusing on the applications of machine learning to operational data. Gerard has previously presented at AES conferences on big data (2018) and on experimental tests of AI applications in evaluation (2023).

Michael Noetel is a psychologist and an academic trying to find the best ways of helping others, and to then put them into practice. He’s an awarded educator, including awards from the Australian Awards for University Teaching. He’s a prolific early career researcher with over 35 publications in the last 5 years. He’s a Senior Lecturer in the School of Psychology at the University of Queensland and is the Chair of Effective Altruism Australia, a charity trying to help Australians find and fix the world's most pressing problems. Michael also recently led a nationally representative survey that revealed Australians are deeply concerned about the risks posed by AI.

Saville Kushner is Adjunct Professor at Drew University, USA, and Emeritus at the University of the West of England. He is a specialist in educational evaluation, and author of the book Personalising Evaluation in which he advocates for Democratic Evaluation. His most recent post was at the University of Auckland as Professor of Public Evaluation.

Matt Healey is a Principal Consultant at First Person Consulting, and co-convenor of the Design and Evaluation Special Interest Group. Matt is an avid adopter of different technologies and techniques, with his interest in AI top of the list. He has been using generative AI platforms to support his work, but also his cooking! Tip: ChatGPT is a great assistant when trying to figure out suitable substitutions in recipes.

Natalie Arthur is a Senior Consultant with Abt Global’s Australian Domestic Team. She is currently working on several evaluation, co-design and strategy review projects with a focus on disability health, health workforce attraction, and Aboriginal and Torres Strait Islander wellbeing. Natalie was a program co-chair for AES23 in Brisbane. She is part of FestEVAL 2024 to soak up contemporary ideas on AI in the company of IRL intelligence.